A few weeks ago, I was with a group of CTOs when someone asked: does your company let your engineers use AI tools like Copilot or ChatGPT?

I thought the question was strange. What do you mean, “let”? They're going to use them no matter what you say. AI code generation tools offer engineers a huge productivity boost. The ability to autocomplete code in seconds or work through a problem with AI isn’t an opportunity developers will pass up.

When we drilled into why this group was reluctant to allow their engineers to use AI, it became apparent that their reservations centered primarily on one concern: the absence of a robust testing framework to give them confidence in the code generated by AI.

But this is still flawed reasoning. If you’re not confident in using AI, how can you be confident in hiring new grads? If you don’t have the tools to have confidence in your code, it doesn’t matter where that code comes from; you’ll always struggle with quality.

A Tale As Old as Stack Overflow

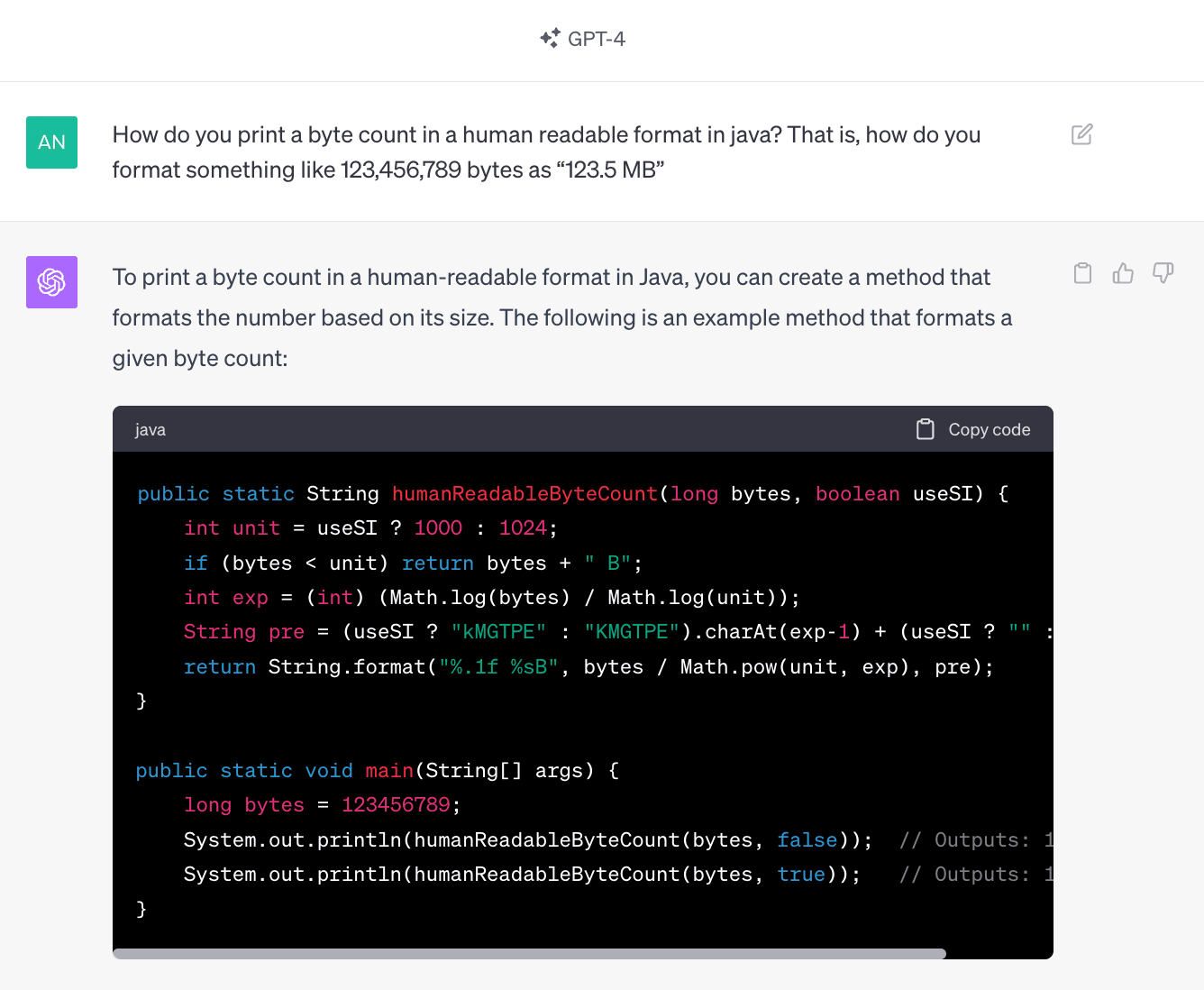

Recently, an old post resurfaced on Hacker News: “The most copied Stack Overflow snippet of all time is flawed!”

The author found almost 7,000 references in GitHub repositories to a Java snippet he wrote on Stack Overflow. And that snippet is flawed.

This is what developers do. If they hit a wall, they’ll head over to Stack Overflow, docs, or tutorials to see how other engineers have solved the problem. If they find the right solution, they might work through it from first principles to code their own solution, or they might just grab it and dump it into their code.

Some take this very far. From the comments in that HN thread:

“I once worked with a developer who wouldn’t let anything come between him seeing an answer and copying it into his code. He wasn’t even reading the question to make sure it was the same problem he was having, let alone the answer. He would literally go Google => follow the first link to Stack Overflow he saw => copy and paste the first code block he saw.”

Engineering leads see AI as a new phenomenon that will hurt the quality of their codebase. However, this is just the latest iteration of developer time-saving. Fresh grads, junior developers, and plenty of senior developers have been pushing poor code to production for years. Poor code is a constant. But it doesn’t have to be.

We can consider AI to be an inexperienced developer because it knows just enough to be dangerous. AI understands how to construct code in response to a given problem and can do that repeatedly, generating thousands of snippets. However, it lacks a deep appreciation for the intricacies of engineering, such as optimization, security, or the critical nature of error checking.

Code-generating AI is trained on billions of lines of code, but that training code is not guaranteed to be correct. “Garbage in, garbage out” and “just because it’s on the Internet doesn’t mean it’s true” both apply. The SO snippet above is a good example: if we ask ChatGPT the same question, the response we get is word-for-word that flawed Stack Overflow answer.

The problem isn’t the people or the AI. It’s a lack of guardrails to prevent poor-quality code from hitting your codebase.

Adding Guardrails Into Your Workflow

Introducing AI into your development process undeniably increases the speed of production, but without proper checks, it also increases the risk of introducing bugs, security vulnerabilities, and inefficient code. Research from Stanford University has shown that AI-generated code tends to include more bugs that are elusive even to seasoned developers. Those issues then have to be caught in the review phase by senior engineers, who must hunt for bugs in the AI-generated code on top of their usual review work.
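To make the idea of a guardrail concrete, here is a minimal sketch in Python. Everything in it is hypothetical and for illustration only: `human_readable_bytes_naive` is not the Stack Overflow snippet discussed earlier, and `check_boundaries` is not part of Trunk Check or any real tool. It shows the kind of plausible-looking helper that can slip through a casual review, plus a small automated boundary check that flags it.

```python
import math

UNITS = ["B", "kB", "MB", "GB", "TB"]


def human_readable_bytes_naive(n: int) -> str:
    """Hypothetical helper: picks the unit with a logarithm, then rounds for display."""
    if n < 1000:
        return f"{n} B"
    exp = min(int(math.log(n) / math.log(1000)), len(UNITS) - 1)
    # Flaw: rounding happens after the unit is chosen, so 999.999 kB prints as 1000.0 kB.
    return f"{n / 1000 ** exp:.1f} {UNITS[exp]}"


def human_readable_bytes(n: int) -> str:
    """One possible fix: step up a unit whenever the displayed value would reach 1000."""
    if n < 1000:
        return f"{n} B"
    value = float(n)
    idx = 0
    while idx < len(UNITS) - 1 and round(value, 1) >= 1000:
        value /= 1000
        idx += 1
    return f"{value:.1f} {UNITS[idx]}"


def check_boundaries(fmt) -> list[int]:
    """Hypothetical automated check: return inputs displayed as 1000 or more of a unit."""
    failures = []
    for n in (999, 1000, 999_949, 999_999, 1_000_000):
        shown = float(fmt(n).split()[0])
        if shown >= 1000:
            failures.append(n)
    return failures
```

Run against the naive helper, the check flags 999,999 bytes, which is displayed as "1000.0 kB"; the fixed version passes. The point is not this particular bug but that a cheap automated check applies equally to code written by a person, pasted from a forum, or generated by AI.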

The answer isn’t to ban AI usage. You want its benefits. You want that increased productivity. The answer is to build a workflow that protects the codebase's integrity and reduces the burden on the senior team. This can be done by adding robust testing protocols. Trunk Check serves as this critical layer of defense. With automated tests, reviews transition from being exhaustive manual endeavors to more strategic, higher-level analyses focused on functionality and design principles. The rest of the review is automated: checking for bugs, formatting the code correctly, meeting internal quality standards, and checking for security issues.

That last point, security, is critical. The training data for ChatGPT only extends up to April 2023, and since then, new security vulnerabilities have undoubtedly emerged. Consequently, AI-generated code might include third-party libraries that are susceptible to these newer vulnerabilities. These issues may not always be detected during manual code reviews. Even a diligent engineer aware of an exploit in a recently incorporated library might not be familiar with all the vulnerabilities that affect the complete library ecosystem.

This is where Trunk Check proves invaluable. It uses security tools that are regularly updated with the latest lists of vulnerabilities, ensuring that any library with known security issues is immediately identified and flagged for review.
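The underlying idea can be sketched in a few lines of Python. This is an illustrative assumption about how such a check might work in principle, not how Trunk Check or any specific security tool is implemented; the `Advisory` type, the package name `examplelib`, and the advisory ID are all made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advisory:
    """Hypothetical advisory record; real advisory feeds carry far more detail."""
    package: str
    fixed_in: tuple[int, ...]  # first version that contains the fix
    advisory_id: str


def parse_version(text: str) -> tuple[int, ...]:
    """Parse a plain dotted version such as "2.3.9" (no pre-release tags handled)."""
    return tuple(int(part) for part in text.split("."))


def flag_vulnerable(pinned: dict[str, str], advisories: list[Advisory]) -> list[str]:
    """Return a finding for every pinned package older than an advisory's fixed version."""
    findings = []
    for adv in advisories:
        version = pinned.get(adv.package)
        if version is not None and parse_version(version) < adv.fixed_in:
            fixed = ".".join(str(part) for part in adv.fixed_in)
            findings.append(
                f"{adv.package}=={version} ({adv.advisory_id}, fixed in {fixed})"
            )
    return findings


# Made-up data: the advisory list is what gets refreshed regularly,
# independently of when the code (or the AI that wrote it) was last updated.
advisories = [Advisory("examplelib", (2, 4, 1), "EXAMPLE-0001")]
pinned = {"examplelib": "2.3.9", "otherlib": "1.0.0"}
findings = flag_vulnerable(pinned, advisories)
```

Because the advisory data is updated separately from the code, a dependency suggested by a model with an older training cutoff can still be flagged the moment a vulnerability in it becomes known.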

Automating the Burden

Just as your engineers are automating the boring and monotonous tasks in coding, you can automate the boring and monotonous tasks in reviewing. This is better for senior engineers. They can concentrate on the core review process without worrying about the overall quality of the code.

Junior engineers are empowered to learn and improve from automated feedback. They can continue to use AI, but they can also use these automated checks as a learning tool. Every time a Trunk Check run fails on errors, they learn how to produce high-quality code. Trunk Check works directly in VS Code, so they can see the errors they and the AI are producing and fix them even before the review stage.

Finally, automation is also better for companies. They can increase their production rate using AI code generation tools, confident that the code in their product is still high quality and secure. They can also scale their teams, knowing they won’t overburden senior engineers.

To start automating your reviews of AI (or human) generated code, all you need to do is set up Trunk Check and make sure it is updated to the latest version. If you’re new to Trunk, you can try it for free or request a demo to get started.